Weight, complexity, and NASA-TLX

Exploring game complexity and effort with the NASA-TLX assessment tool

Welcome to Skeleton Code Machine, a weekly publication that explores tabletop game mechanisms. Spark your creativity as a game designer or enthusiast, and think differently about how games work. Check out Dungeon Dice and 8 Kinds of Fun to get started!

Last week we looked at Trophy Dark and luck mitigation, specifically how it uses re-rolls with a cost.

This week we are exploring how a NASA workload assessment tool can be applied to the design and testing of tabletop board games and TTRPGs. Much like the components-as-storage idea, it’s something I’ve been thinking about for a while, and I’m not sure I’ve seen it discussed.

Want to curse some items? I just released Caveat Emptor, a solo journaling game where you are an Assistant Demon selling cursed items to unsuspecting customers.

Available now as a Digital PDF or Print Pre-Order.

NASA Task Load Index (TLX)

Having the ability to objectively and quantitatively assess the difficulty of tasks is critical in spaceflight research.

As part of the Human Performance Research Group at NASA’s Ames Research Center, Sandra Hart and Lowell Staveland conducted a multi-year research program to “identify the factors associated with variations in subjective workload within and between different types of tasks.”

Their paper, Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research (Hart & Staveland, 1988), has become an important work in the field of human factors research.

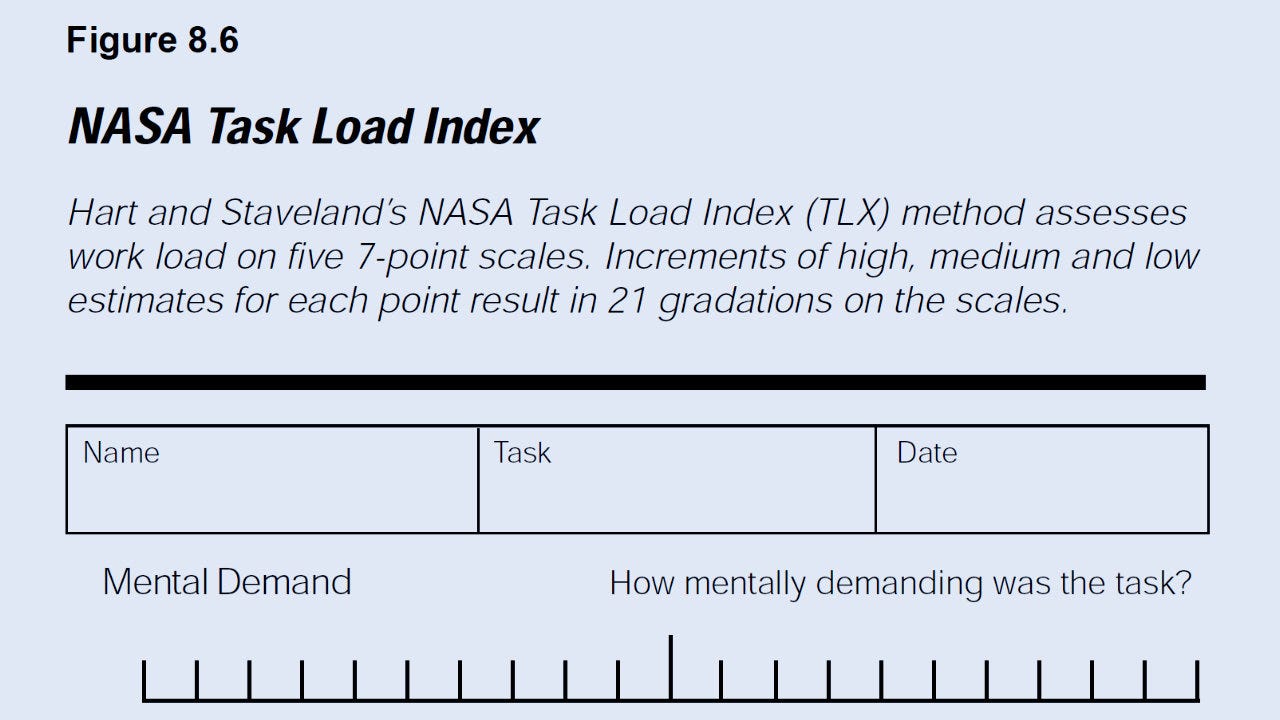

The NASA-TLX assesses the perceived workload of various tasks based on six subjective scales:

Mental Demand (Low/High): How much mental and perceptual activity was required (e.g. thinking, deciding, calculating, remembering, looking, searching, etc.)? Was the task easy or demanding, simple or complex, exacting or forgiving?

Physical Demand (Low/High): How much physical activity was required (e.g. pushing, pulling, turning, controlling, activating, etc.)? Was the task easy or demanding, slow or brisk, slack or strenuous, restful or laborious?

Temporal Demand (Low/High): How much time pressure did you feel due to the rate or pace at which the tasks or task elements occurred? Was the pace slow and leisurely or rapid and frantic?

Performance (Good/Poor): How successful do you think you were in accomplishing the goals of the task set by the experimenter (or yourself)? How satisfied were you with your performance in accomplishing these goals?

Effort (Low/High): How hard did you have to work (mentally and physically) to accomplish your level of performance?

Frustration (Low/High): How insecure, discouraged, irritated, stressed and annoyed versus secure, gratified, content, relaxed and complacent did you feel during the task?

Implementation of the NASA-TLX uses a two-part evaluation process:

Pairwise comparisons: Participants in the study compare the scales (e.g. Mental or Physical) in pairs (i.e. two at a time), stating which one they feel contributes more to the overall load of the task. With six scales there are fifteen possible pairs, and each scale’s score is the number of times it was chosen (from 0 to 5).

Load ratings: Participants rate each scale on a printed line divided into 20 equal intervals by tick marks, either during or after each task. A mark on the line can then be converted directly into a 0-100 score.

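Sometimes the pairwise comparisons are skipped, and other times they are only conducted once at the beginning of a larger study. When both parts are used, they are combined with a simple weighted average: each rating is multiplied by the number of times its scale was chosen in the pairwise comparisons, and the sum is divided by fifteen.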

When combined, the pairwise comparisons and load ratings can give an accurate, repeatable picture of a task’s workload as perceived by the person doing the task.
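To make the two-part procedure concrete, here is a minimal Python sketch of how the pairwise tallies and the load ratings might be combined. It assumes the usual weighted-average scoring (each rating multiplied by how often its scale was picked, divided by the fifteen comparisons) plus an unweighted mean for when the comparisons are skipped. The function names and the playtester data are hypothetical, made up purely for illustration.

```python
from itertools import combinations

SCALES = ("Mental", "Physical", "Temporal", "Performance", "Effort", "Frustration")
PAIRS = list(combinations(SCALES, 2))  # 6 choose 2 = 15 pairs


def tick_to_rating(tick):
    """Convert a mark on the 20-interval line (tick 0..20) to a 0-100 rating."""
    if not 0 <= tick <= 20:
        raise ValueError("tick must be between 0 and 20")
    return tick * 5


def tally_weights(winners):
    """Count how often each scale was picked across the 15 pairwise comparisons.

    `winners` maps each pair in PAIRS to the scale the participant chose.
    """
    weights = {scale: 0 for scale in SCALES}
    for pair in PAIRS:
        chosen = winners[pair]
        if chosen not in pair:
            raise ValueError(f"{chosen!r} is not part of {pair}")
        weights[chosen] += 1
    return weights  # the counts always sum to 15


def weighted_workload(weights, ratings):
    """Weighted average of the six ratings (assumed standard scoring)."""
    return sum(weights[s] * ratings[s] for s in SCALES) / sum(weights.values())


def raw_workload(ratings):
    """Unweighted mean, for when the pairwise comparisons are skipped."""
    return sum(ratings[s] for s in SCALES) / len(SCALES)


# Hypothetical playtester: always picks the first-listed scale in each pair.
winners = {pair: pair[0] for pair in PAIRS}
ticks = {"Mental": 16, "Physical": 4, "Temporal": 8,
         "Performance": 6, "Effort": 12, "Frustration": 10}
ratings = {scale: tick_to_rating(t) for scale, t in ticks.items()}

weights = tally_weights(winners)
print(weights)
print(weighted_workload(weights, ratings))  # 48.0
print(round(raw_workload(ratings), 1))      # 46.7
```

In this made-up example the weighted score lands a little above the raw mean, because the playtester ranked Mental Demand (their highest rating) as the biggest contributor to the overall load.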

Broader applications of NASA-TLX

According to Google Scholar, the original NASA-TLX paper has been cited over 17,000 times. In 2006, Sandra Hart wrote a paper, NASA Task Load Index (NASA-TLX): 20 Years Later (Hart, 2006), reviewing how the NASA-TLX has spread well beyond its original goals and intent. Her paper is a survey of 550 studies which used the NASA-TLX in fields well beyond the original application of aviation and spaceflight.

It’s easy to see how this could be used in various fields:

Human-Machine Interface (HMI) design in industrial settings

Assessing workloads in healthcare for surgeons and nurses

Education and training development, especially when conducted online

So can we use this method in tabletop board game and roleplaying game design?

The workload index of tabletop games

Playtesting is a complicated topic. There are entire books written on the subject. How you conduct your playtesting depends on so many things, including the types of fun you want your players to have, what medium you are using, and a hundred other factors.

That said, it’s really interesting to think about using a simple six-scale system to generate quantitative data for your game.

Adapting the first three NASA-TLX scales to gaming, we can look at the following:

Mental Demand: How mentally challenging is the game? Does it require deep strategies or is it simple and tactical? Are there many rules to remember or is it easy and intuitive? Does it present hard puzzles to solve or is it more of a social experience?

Physical Demand: How involved is the setup and takedown of the game? Are game components easy to sort, access, and use during the game? Are components “fiddly,” requiring lots of “touches” during the game? Does the game rely on speed or dexterity to win?

Temporal Demand: How is time perceived during the game? Do players feel rushed and unable to form a strategy? Is there too much or too little downtime?

Games that incorporate real-time mechanisms would also impact the player’s temporal demand rating:

In real-time games, players are encouraged to take their turns as quickly as possible, often simultaneously. In some real-time games, a player is penalized if they don't do something within a set amount of time.

Games like Exit: The Game from Thames & Kosmos use an actual stopwatch to judge the player’s performance at the end of the game. Space Alert has all actions happening in just 10 minutes of actual time. Shadowdark RPG uses actual timers for torches, with one torch lasting about an hour of real time. ARC uses a real-time Doomsday Clock which ticks every 30 minutes of actual play.

Measuring effort and frustration

The other three scales can similarly be adapted:

Performance: Did the players feel they successfully met the objectives of the game? Did they feel the game was too hard or too easy? Were victory points or other goals achieved?

Effort: How much effort was required to play the game? Was this a relaxing game to play at the end of the day, or a game that is more of a special event?

Frustration: Did the rules feel like they were easy to understand, or did they require constant clarifications? Did players make a lot of mistakes when trying to follow the rules? Did the rules and mechanisms feel fair? Did the amount of time and effort put into the game feel worthwhile?

Certainly most playtesting forms will touch on these ideas, but it is really interesting to consider stating them explicitly. It can be easy to miss these “big picture” aspects when asking questions about mechanisms and rules.

At the end of the game, how did the players feel? Were they frustrated? Did they think it was worth it?

Players might not want to write honest answers to those questions in a text-based form, but when they are required to put a line on a 0-100 scale you might get a real answer.
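As a rough illustration of what you could do with those marks after a playtest, the sketch below averages each scale across players and flags the ones that run high. Everything here is an assumption for the sake of example: the three sets of responses are invented, the `summarize` and `flag_high` helpers are hypothetical, and the cutoff of 60 is arbitrary rather than anything defined by NASA-TLX.

```python
from statistics import mean

SCALES = ("Mental", "Physical", "Temporal", "Performance", "Effort", "Frustration")

# Invented 0-100 ratings from three playtesters. On every scale a higher
# number is "worse" (the Performance scale runs from Good to Poor).
responses = [
    {"Mental": 70, "Physical": 20, "Temporal": 30, "Performance": 40, "Effort": 60, "Frustration": 80},
    {"Mental": 60, "Physical": 10, "Temporal": 50, "Performance": 30, "Effort": 50, "Frustration": 70},
    {"Mental": 80, "Physical": 30, "Temporal": 40, "Performance": 50, "Effort": 70, "Frustration": 60},
]


def summarize(responses):
    """Return the mean, minimum, and maximum rating for each scale."""
    summary = {}
    for scale in SCALES:
        values = [r[scale] for r in responses]
        summary[scale] = {"mean": mean(values), "min": min(values), "max": max(values)}
    return summary


def flag_high(summary, threshold=60):
    """List scales whose mean rating meets an (arbitrary) threshold."""
    return [scale for scale in SCALES if summary[scale]["mean"] >= threshold]


summary = summarize(responses)
for scale in SCALES:
    print(scale, summary[scale])
print(flag_high(summary))  # ['Mental', 'Effort', 'Frustration']
```

With numbers like these, the follow-up question for the designer would be whether high Mental Demand is the intended experience, and whether the Frustration score comes from the rules or from the challenge itself.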

NASA-TLX vs. BGG Weight

In the world of board games, there is a term called “weight” which describes the complexity of a game. In simple terms, a really complex game would be called “heavy” while a simple game would be called “light.”

The actual meaning of board game weight is, however, more nuanced than that. It is more than just how hard a game is to understand. In fact, the BGG wiki and documentation state outright that weight is not clearly defined.

Instead BGG offers a number of questions that can be used:

How complex/thick is the rulebook? How long does it take to learn the rules?

What proportion of time is spent thinking and planning instead of resolving actions?

How hard and long do you have to think to improve your chance of winning?

How little randomness is in the game?

How much technical skill (math, reading ahead moves, etc.) is necessary?

How many times do you need to play before you feel like you "get" the game?

Those questions should guide the user’s rating on a 1-5 point scale, with 5 being the heaviest game. For reference, On Mars (Lacerda, 2020) has a weight of 4.67 and Catan (Teuber, 1995) has a weight of 2.29 out of 5.

There is overlap between the concept of weight and the NASA-TLX workload. Both try to get at something deeper than easy-to-measure metrics like “time to complete” or “points achieved.” Both try to take messy feelings and intuition and compress them into a numeric scale.

BGG weight is a good example of how this kind of information can be useful. Because it is built from the ratings of hundreds or thousands of users, it allows potential players to compare games. While two games with 4.23 and 4.56 weights might not be meaningfully different, two games with vastly different weights (e.g. 1.20 vs. 4.21) will almost certainly be different.

Is it perfect? No.

Still, as goes the saying attributed to George Box: “All models are wrong, but some are useful.”

Perceived workload

It’s important to note that the NASA-TLX measures perceived workload and that it is not an external measure of the workload. In the same way, the Performance scale reflects how participants felt they performed, not how they actually performed.

To measure actual task performance, you’d need to use a different measure such as the time required to complete a task or accuracy/error rate.

Conclusion

Some things to think about:

NASA-TLX has potential applications in game design: While it probably can’t be used exactly as published, it can be adapted to work. It’s an interesting design that is backed up by decades of research. There is even an iOS app for it.

Messy concepts can still be measured: NASA-TLX, board game weight, and other metrics aren’t perfect. They are abstractions of complex ideas at best. Yet we can still try to measure them and that data can be useful.

Human factors engineering (HFE) is really interesting: HFE applies principles from psychology and physiology to the design of systems. My first introduction to it was Sidney Dekker’s Field Guide to Understanding Human Error, a book I highly recommend.

— E.P. 💀

P.S. Want to make your own TTRPG? Pre-orders are open now for Make Your Own One-Page Roleplaying Game, a guide from Skeleton Code Machine! Pre-orders close next week, so don’t wait!

Skeleton Code Machine is a production of Exeunt Press. All previous posts are in the Archive on the web. If you want to see what else is happening at Exeunt Press, check out the Exeunt Omnes newsletter.

I teach engineering classes with students on occasion, this could be a great reflection tool after projects!

I love the aside. Oh, hey, I just released a thing, but never mind that, let's dig into astronaut stuff and how it can be applied to game play. :)